In a bizarre turn of events that sounds like a plot from a spy movie, community officials in Wenzhou, Zhejiang Province, China, have been accused of cheating their workplace attendance system using a surprisingly low-tech method: printed paper masks.

This incident has not only sparked outrage over workplace ethics but has also raised serious questions about the reliability of widely deployed biometric security systems. How could a piece of paper fool a machine designed to identify human features?

The “Paper Face” Scam Explained

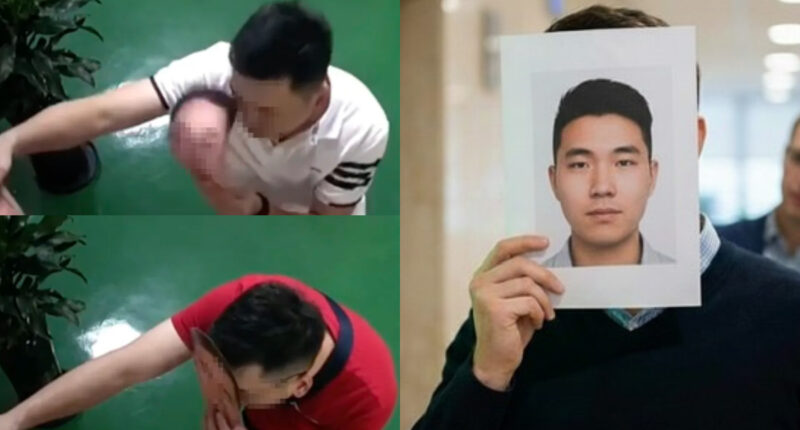

According to reports from the South China Morning Post and local media, the scheme unfolded at the Lijia Yang Community committee in Longgang City. Staff members allegedly printed high-resolution photographs of their colleagues’ faces. When a colleague was absent, an accomplice would simply hold the printed photo in front of the facial recognition attendance machine to clock them in.

Even the community’s Party secretary was reportedly involved in the attendance fraud. The complaint, filed by a local resident on October 23, 2025, included surveillance footage allegedly showing officials holding up these paper cutouts to the scanner.

Tech Analysis: Why Did the AI Fail?

For tech enthusiasts, the burning question is why the system failed. Modern facial recognition usually relies on “Liveness Detection”, a check that the camera is seeing a live person rather than a reproduction, to prevent exactly this kind of fraud. However, many budget-friendly or older attendance systems lack it.

The failure likely occurred due to the absence of:

- 3D Depth Sensing: Advanced cameras project a pattern of infrared dots to map the depth of a face. A sheet of paper is flat (2D), while a real face has measurable relief.

- Micro-movement Analysis: Real humans blink, breathe, and make tiny movements. A photo is static.

- Texture Analysis: High-end AI can distinguish between human skin texture and the reflection of paper or a phone screen.
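To make the depth-sensing idea concrete, here is a minimal sketch of a flatness check. It assumes the camera delivers a rectangular depth map in millimetres; the function names, the 5 mm threshold, and the synthetic captures are all hypothetical, and nothing here reflects how any particular attendance product works. The idea is to fit a plane to the depth values and measure how far the surface deviates from it.

```python
import math

def plane_residual_rms(depth):
    """Return the RMS deviation of a depth map from its least-squares plane.

    `depth` is a full rectangular grid (list of equal-length rows) of
    distances in millimetres; this input format is an assumption.
    """
    rows, cols = len(depth), len(depth[0])
    n = rows * cols
    x_mean, y_mean = (cols - 1) / 2, (rows - 1) / 2
    z_mean = sum(map(sum, depth)) / n

    # On a full grid the centred x and y coordinates are uncorrelated,
    # so the two slopes of the best-fit plane can be solved separately.
    sxx = rows * sum((x - x_mean) ** 2 for x in range(cols))
    syy = cols * sum((y - y_mean) ** 2 for y in range(rows))
    sxz = sum((x - x_mean) * depth[y][x] for y in range(rows) for x in range(cols))
    syz = sum((y - y_mean) * depth[y][x] for y in range(rows) for x in range(cols))
    slope_x = sxz / sxx if sxx else 0.0
    slope_y = syz / syy if syy else 0.0

    squared_error = 0.0
    for y in range(rows):
        for x in range(cols):
            plane_z = z_mean + slope_x * (x - x_mean) + slope_y * (y - y_mean)
            squared_error += (depth[y][x] - plane_z) ** 2
    return math.sqrt(squared_error / n)

def looks_flat(depth, threshold_mm=5.0):
    """Flag a capture as a likely flat artifact (illustrative threshold)."""
    return plane_residual_rms(depth) < threshold_mm

# Synthetic 21x21 captures, not real sensor data.
size = 21
# A tilted sheet of paper: depth changes linearly, so it is still a plane.
tilted_paper = [[400 + 2 * x + y for x in range(size)] for y in range(size)]
# A crude face stand-in: a 40 mm bump rising toward the camera.
face_like = [
    [400 - 40 * math.exp(-((x - 10) ** 2 + (y - 10) ** 2) / 50) for x in range(size)]
    for y in range(size)
]

print(looks_flat(tilted_paper))  # planar surface
print(looks_flat(face_like))     # curved surface
```

Paper held flat or at an angle stays close to a plane, whereas the nose, cheeks, and eye sockets of a real face do not. A check along these lines is the kind of safeguard the attendance machine appears to have lacked.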

This suggests the office was using an older system that matches 2D images only, which is highly vulnerable to what security experts call “Presentation Attacks”: showing the sensor an artifact, such as a printed photo, instead of a live face.
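The weakness of 2D-only matching can be illustrated with a toy decision rule. The sketch below assumes, hypothetically, that an upstream model turns each camera image into a feature vector and that a separate liveness check yields a score between 0 and 1; the `Capture` type, the thresholds, and the sample numbers are invented for illustration. A sharp printout produces nearly the same features as the real face, so a matcher that looks only at similarity has no reason to reject it.

```python
import math
from dataclasses import dataclass
from typing import Sequence

@dataclass
class Capture:
    embedding: Sequence[float]  # hypothetical features from the 2D image
    liveness_score: float       # hypothetical 0..1 output of a liveness check

def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def clock_in_2d_only(capture, enrolled, match_threshold=0.9):
    """Accept whenever the image resembles the enrolled face."""
    return cosine_similarity(capture.embedding, enrolled) >= match_threshold

def clock_in_with_liveness(capture, enrolled,
                           match_threshold=0.9, liveness_threshold=0.5):
    """Accept only if the face matches AND the liveness check passes."""
    return (clock_in_2d_only(capture, enrolled, match_threshold)
            and capture.liveness_score >= liveness_threshold)

# Invented sample values for one enrolled employee.
enrolled = [0.20, 0.70, 0.10]
printed_photo = Capture(embedding=[0.21, 0.69, 0.10], liveness_score=0.05)
live_face = Capture(embedding=[0.19, 0.72, 0.12], liveness_score=0.93)

print(clock_in_2d_only(printed_photo, enrolled))        # photo matches the face
print(clock_in_with_liveness(printed_photo, enrolled))  # photo fails liveness
print(clock_in_with_liveness(live_face, enrolled))      # live face passes both
```

The point of the second function is that identity matching and liveness are separate questions, and an attendance system needs a yes on both.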

The Consequences and Investigation

The Longgang Municipal Committee’s Social Work Department has confirmed that an investigation is underway, with results expected by the end of 2025. While the accused officials have declined to comment, the implications are severe.

In China, where facial recognition is used for everything from payments to subway entry, this incident exposes a “low-tech” loophole in a “high-tech” society. It serves as a warning to businesses globally: Biometric convenience should not come at the cost of verifying that a real person is present.

Final Thoughts

While the image of officials holding up paper masks might seem comical, it highlights a critical flaw in relying on facial recognition that has no safeguards against spoofing. As technology advances, so too do the methods to deceive it. Organizations must upgrade to systems with Anti-Spoofing capabilities to ensure that the person clocking in is not just a photo, but a present, living employee.